OpenSpace Vision Engine automates turning images into reality capture

Computer Vision: AI that makes sense of digital images

Computer vision is a field of AI that trains computers to interpret and understand digital images and videos. It’s used in self-driving cars, industrial automation, and robotics, to name a few. OpenSpace’s Vision Engine relies on computer vision to automatically align images into a single integrated scene, recognize and label key features, and map them to floor plans, for a rich, visual understanding of the captured environment.

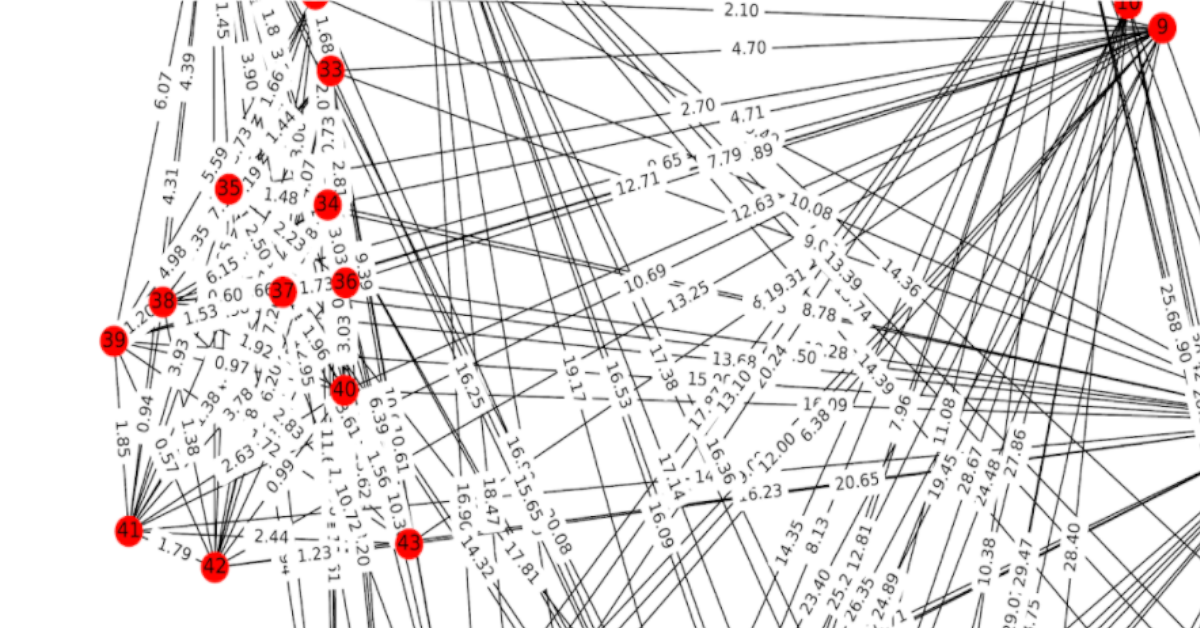

3D reconstruction: creating a 3D point cloud

Our Vision Engine uses 3D reconstruction to locate features in space and recreate 3D environments. It matches features between two images, then computes the estimate of camera position that best aligns those features. This process is repeated thousands of times across a full OpenSpace 360° video capture, creating a 3D point cloud. The point cloud ties each feature in an image to a 3D location in space.
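Once a feature has been matched in two images and the camera positions are known, its 3D location can be recovered by triangulation. The sketch below uses the midpoint method, one common textbook approach. It assumes the camera centers and the viewing-ray directions through the matched feature have already been computed from camera poses and intrinsics. The function name `triangulate_midpoint` is hypothetical, and this is an illustration of the general technique, not OpenSpace's implementation.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Estimate the 3D point seen along rays c1 + s*d1 and c2 + t*d2.

    c1, c2: camera centers. d1, d2: viewing-ray directions through the
    matched feature in each image (assumed already known). Because noisy
    rays rarely intersect exactly, return the midpoint of the shortest
    segment connecting them.
    """
    w0 = tuple(a - b for a, b in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are (nearly) parallel; depth is unobservable")
    s = (b * e - c * d) / denom  # closest-point parameter on ray 1
    t = (a * e - b * d) / denom  # closest-point parameter on ray 2
    p1 = tuple(ci + s * di for ci, di in zip(c1, d1))
    p2 = tuple(ci + t * di for ci, di in zip(c2, d2))
    return tuple((x + y) / 2 for x, y in zip(p1, p2))


# Two cameras 1 unit apart both observe a feature at (0.5, 0, 2).
point = triangulate_midpoint((0, 0, 0), (0.5, 0, 2), (1, 0, 0), (-0.5, 0, 2))
print(point)  # prints (0.5, 0.0, 2.0)
```

Repeating this for every matched feature yields a point cloud. Production pipelines typically refine all points and camera poses jointly, for example with bundle adjustment.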

Machine learning: making the Vision Engine smarter

Machine learning algorithms create a mathematical model based on training data to predict future results without being explicitly programmed to perform the task. Our Vision Engine uses each capture and walk track as a training dataset. Every time you walk the site, the Vision Engine learns a bit more about the 3D environment you’re in, aligning and mapping images faster and more accurately.

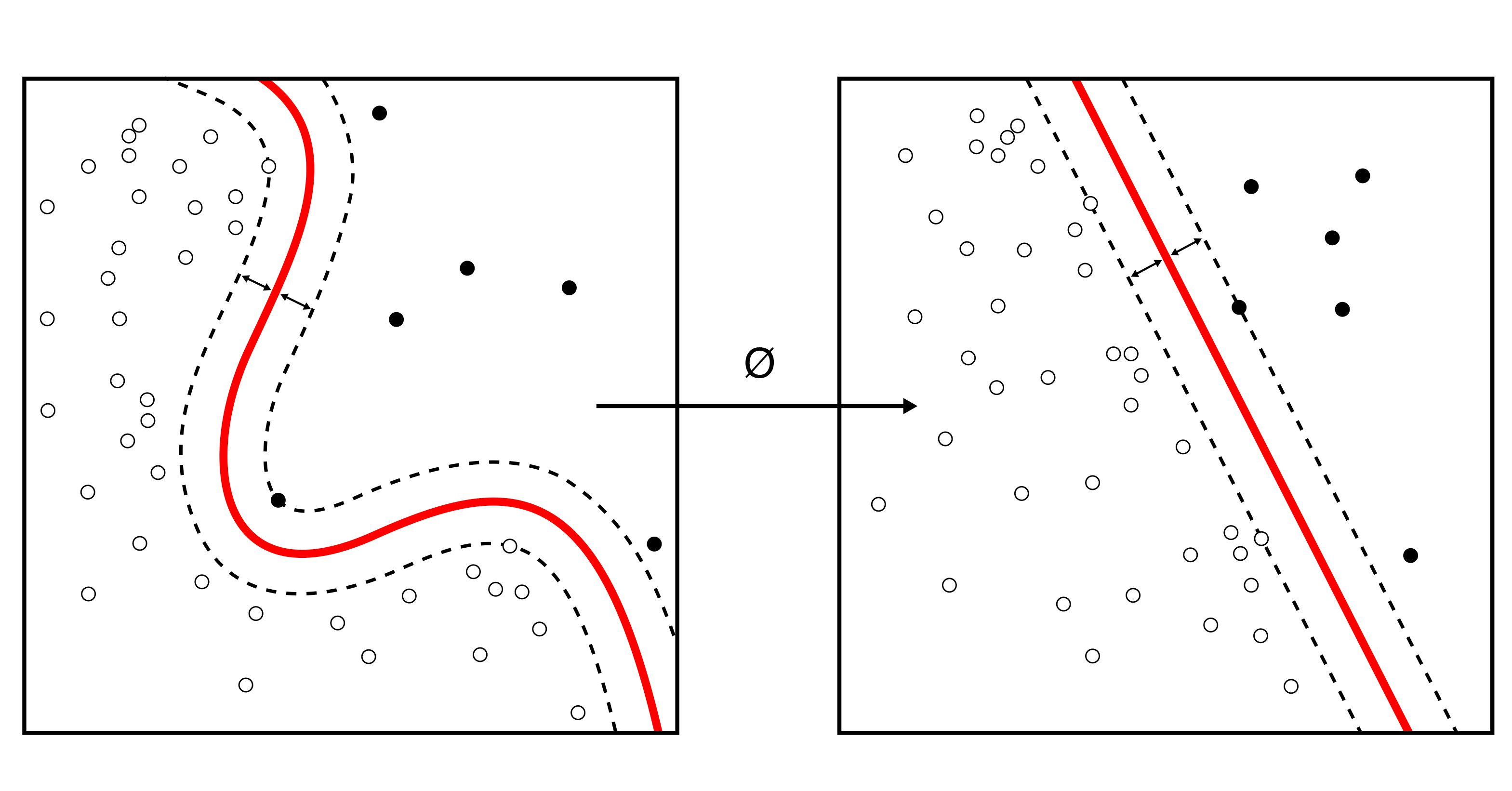

SLAM: aligning images

Simultaneous Localization and Mapping (SLAM) is a technique for constructing a map of an unknown environment while simultaneously tracking your own position within it as you move through it. SLAM is one of the core algorithms used for self-driving car navigation. OpenSpace uses image-based SLAM to estimate the path of the walker on a floor plan, with algorithms constantly aligning sequential data to estimate position and path.
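One building block of estimating a walker's path is chaining relative motion estimates between sequential frames into a trajectory, a process known as odometry. Odometry alone accumulates drift, so SLAM systems correct it when they recognize a previously visited place (loop closure). The sketch below shows both ideas in 2D under simplifying assumptions. The relative steps are assumed to be given, the function names are hypothetical, and the linear error spreading is a deliberately naive stand-in for the pose-graph optimization that real SLAM systems use. It is not OpenSpace's algorithm.

```python
import math
from typing import List, Tuple

Pose = Tuple[float, float, float]  # (x, y, heading in radians) in floor-plan coordinates


def compose(pose: Pose, step: Pose) -> Pose:
    """Apply a relative motion (dx forward, dy left, dtheta) expressed in the walker's frame."""
    x, y, th = pose
    dx, dy, dth = step
    return (
        x + dx * math.cos(th) - dy * math.sin(th),
        y + dx * math.sin(th) + dy * math.cos(th),
        th + dth,
    )


def integrate_path(start: Pose, steps: List[Pose]) -> List[Pose]:
    """Chain relative steps into an absolute path (odometry)."""
    path = [start]
    for step in steps:
        path.append(compose(path[-1], step))
    return path


def distribute_loop_closure_error(path: List[Pose], true_end: Tuple[float, float]) -> List[Pose]:
    """Naive drift correction: when the walker is known to be back at true_end,
    spread the end-point position error linearly back along the path."""
    n = len(path)
    ex = path[-1][0] - true_end[0]
    ey = path[-1][1] - true_end[1]
    corrected = []
    for i, (x, y, th) in enumerate(path):
        f = i / (n - 1) if n > 1 else 1.0  # 0 at the start, 1 at the end
        corrected.append((x - f * ex, y - f * ey, th))
    return corrected


# Walk a 1 m square, turning left at each corner; the last leg is over-estimated.
steps = [(1.0, 0.0, math.pi / 2)] * 3 + [(1.1, 0.0, math.pi / 2)]
raw = integrate_path((0.0, 0.0, 0.0), steps)
fixed = distribute_loop_closure_error(raw, (0.0, 0.0))
print(raw[-1][:2], fixed[-1][:2])
```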


Advanced analytics find insights in images

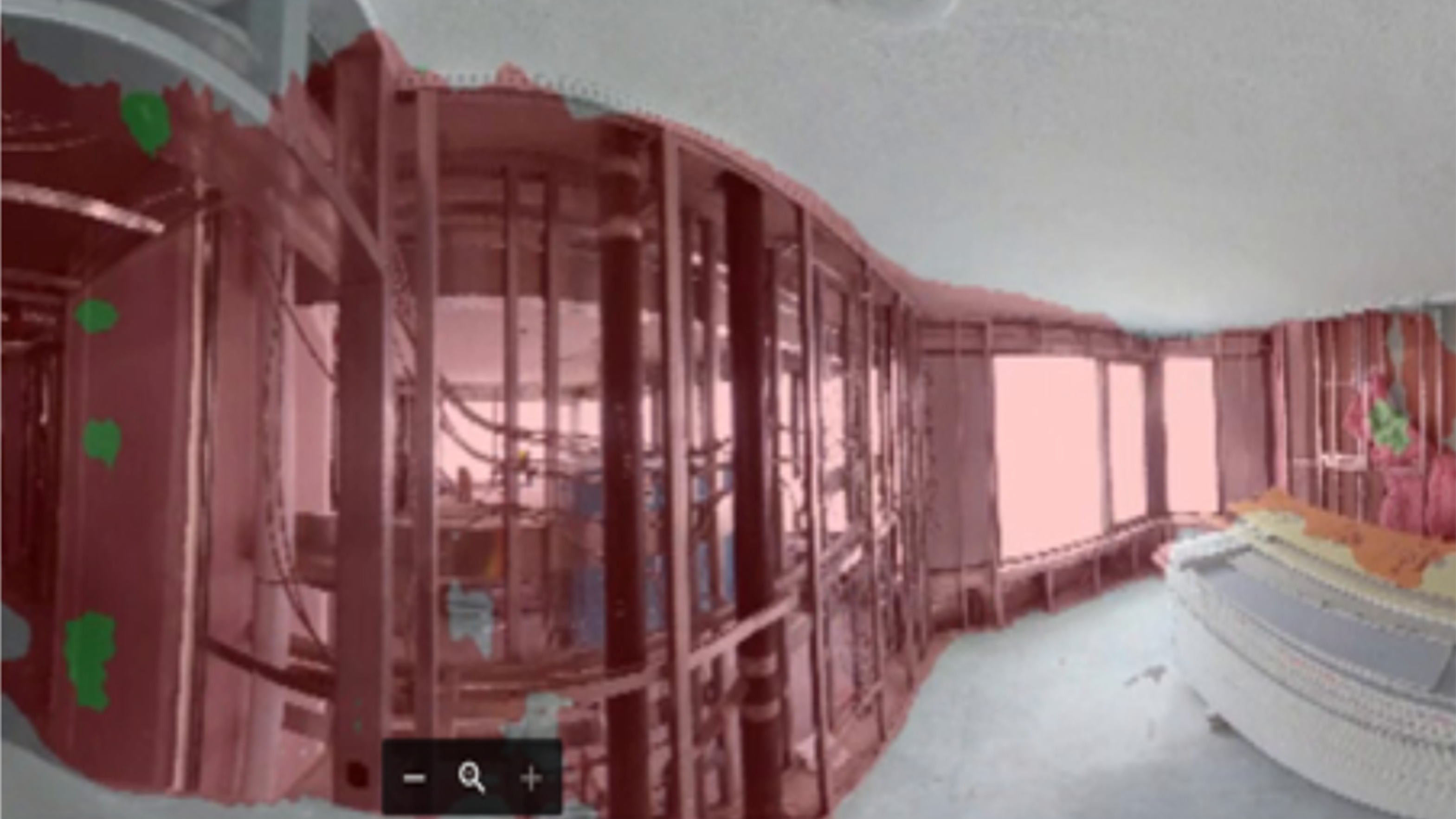

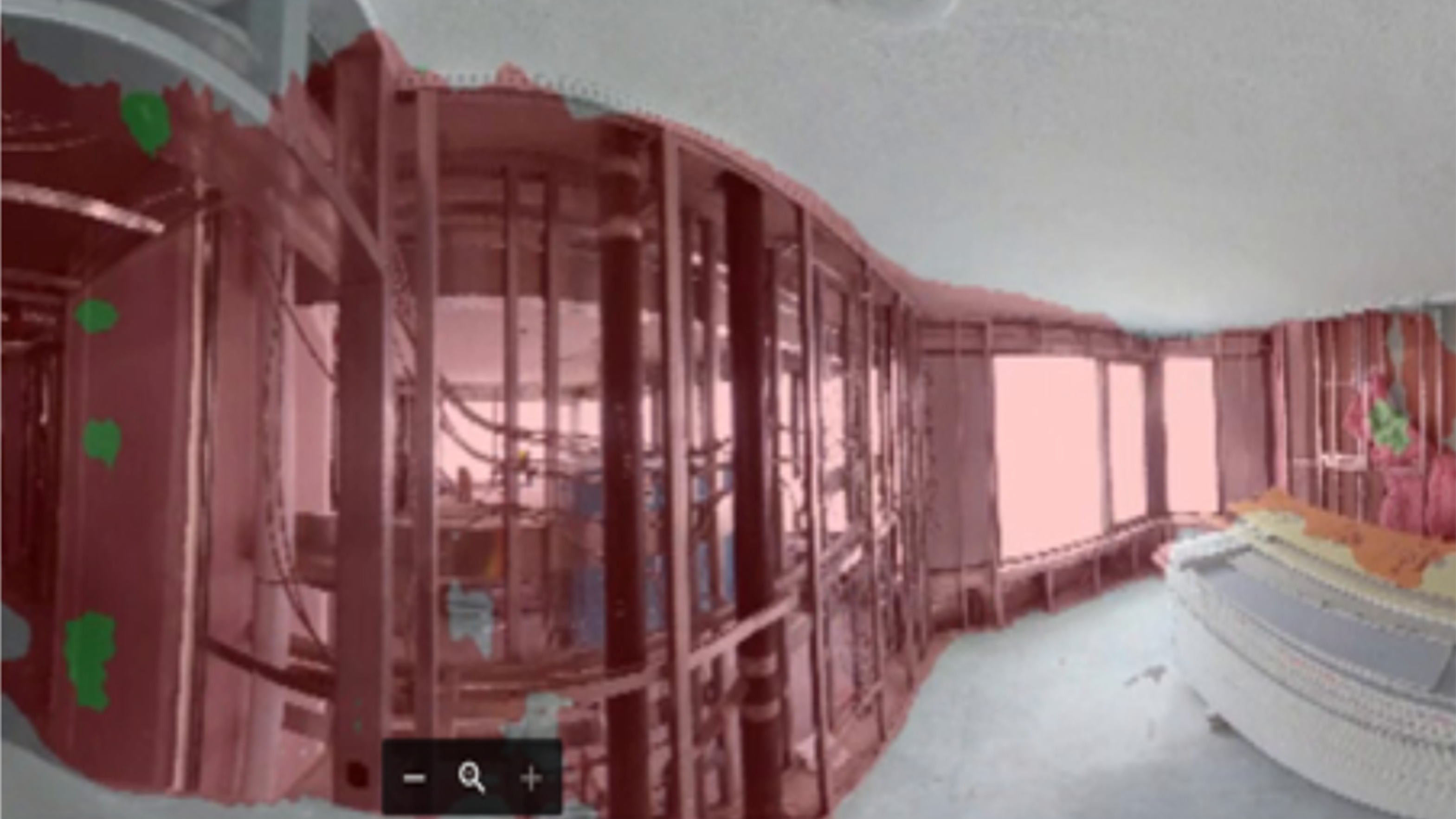

Semantic segmentation: turning images into metrics

Semantic segmentation is the process of grouping pixels into logical chunks by assigning each pixel a class label. For example, the pixels of a yellow shirt are classified separately from the pixels of a red scarf. OpenSpace is using semantic segmentation to develop a series of construction-specific classes and classifiers that transform raw images into logical segments that can be tracked and counted.
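After a segmentation model has labeled every pixel, turning the label map into countable metrics is straightforward. The sketch below computes the pixel area of each class and counts the 4-connected regions of each class using a breadth-first flood fill. The class names (`"drywall"`, `"stud"`, `"bg"`) and the tiny label map are hypothetical. Treating each connected region as one countable item is a simplifying assumption, since touching objects of the same class would merge into a single region (instance segmentation addresses that).

```python
from collections import Counter, deque
from typing import Dict, Hashable, List

LabelMap = List[List[Hashable]]  # one class label per pixel, row-major


def pixel_areas(labels: LabelMap) -> Counter:
    """Number of pixels assigned to each class."""
    return Counter(label for row in labels for label in row)


def count_segments(labels: LabelMap) -> Dict[Hashable, int]:
    """Count 4-connected regions per class label (BFS flood fill)."""
    rows = len(labels)
    cols = len(labels[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    counts: Dict[Hashable, int] = {}
    for r in range(rows):
        for c in range(cols):
            if seen[r][c]:
                continue
            cls = labels[r][c]
            counts[cls] = counts.get(cls, 0) + 1  # found a new region of this class
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and labels[ny][nx] == cls):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return counts


grid = [
    ["drywall", "drywall", "bg", "stud"],
    ["drywall", "bg", "bg", "stud"],
    ["bg", "bg", "stud", "bg"],
]
print(pixel_areas(grid))     # pixels per class
print(count_segments(grid))  # drywall: 1 region, stud: 2, bg: 2
```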

Progress tracking: quantifying image-based metrics over time

Once images have been processed, aligned, located, and segmented, they can be analyzed to deliver progress tracking. Items found using object detection or classified using semantic segmentation can be placed in 3D space using the point cloud and tracked over time. The result is a quantitative map of project activity that OpenSpace uses to verify work in place, maintain trade coordination, and benchmark productivity.
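Once each capture yields an installed quantity per item, tracking progress over time comes down to comparing those quantities against a plan. The sketch below assumes hypothetical inputs: per-capture observed quantities (for example, square feet of drywall detected) and planned totals, such as figures from a quantity takeoff. It reports percent complete per item and the change since the previous capture. The data, units, and function names are illustrative assumptions, not OpenSpace's data model.

```python
from typing import Dict, List, Optional, Tuple

Quantities = Dict[str, float]


def percent_complete(observed: Quantities, planned: Quantities) -> Dict[str, float]:
    """Observed / planned quantity per item, as a percentage capped at 100."""
    return {
        item: min(100.0, 100 * observed.get(item, 0.0) / total)
        for item, total in planned.items()
    }


def progress_report(
    captures: List[Tuple[str, Quantities]], planned: Quantities
) -> List[Tuple[str, Dict[str, float], Dict[str, float]]]:
    """For each capture (in date order), return percent complete and the change in points."""
    report = []
    previous: Optional[Dict[str, float]] = None
    for date, observed in captures:
        pct = percent_complete(observed, planned)
        if previous is None:
            delta = {item: 0.0 for item in pct}
        else:
            delta = {item: pct[item] - previous[item] for item in pct}
        report.append((date, pct, delta))
        previous = pct
    return report


# Hypothetical weekly captures: drywall in square feet, ductwork in linear feet.
planned = {"drywall": 6000.0, "ductwork": 100.0}
captures = [
    ("2023-05-01", {"drywall": 1200.0, "ductwork": 40.0}),
    ("2023-05-08", {"drywall": 2100.0, "ductwork": 55.0}),
]
for date, pct, delta in progress_report(captures, planned):
    print(date, pct, delta)
```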

Big data visualization: providing the analytics foundation

Big data visualization is the practice of rendering visual representations of large, complex datasets, making them easier to digest and understand. OpenSpace has a long history of developing innovative visualizations, starting with a TED Talk in which an MIT researcher describes how he recorded 90,000 hours of home video to understand how and when his infant son learned new words. The talk highlights the work of our founders, who were his students at the time.

“Lots of companies claim to be automated, but this really is. I’m usually really skeptical of people who come out with construction tech, because a lot of us are set in our ways. But then they showed me the software and it blew me away.”

Tim Crawford, Superintendent

Simple-to-use tools built with complex technology

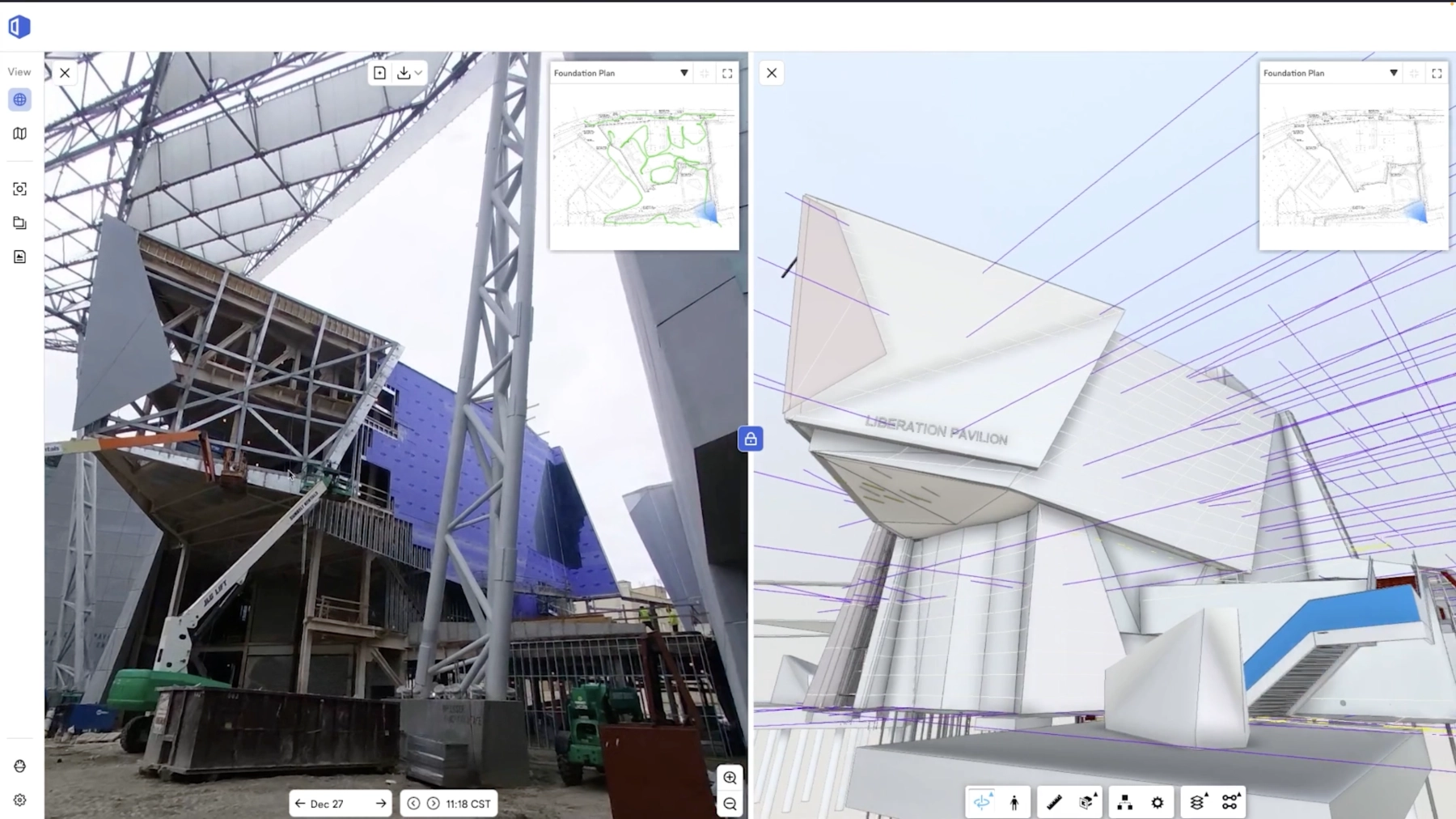

First fully automated reality capture system

With OpenSpace Capture, our Vision Engine stitches images together and pins them to your floor plan, creating a comprehensive and shared visual record of your jobsite.

AI that automatically measures work completed

With OpenSpace Track, image-based data from our Vision Engine is used to segment, classify, and track the progress of drywall, mechanical, electrical, and more.

Advanced technologies are simplifying the work of the built world

Explore how 360° reality capture and AI are simplifying coordination between field and VDC teams at Waypoint 2023.

Beyond the technology

Academy

Continuous learning with free, on-demand courses that include easy-to-follow instructions plus tips and tricks.

Community

Connect, learn, and innovate with construction professionals, and forge the path for the future of construction.

ROI

Access resources for tips on evaluating how reality capture can help you build faster and with less risk.