A deeper dive into Spatial AI & AI Autolocation

September 23, 2025

Michael Fleischman is CTO & Co-Founder of OpenSpace

The most important device on your jobsite is already in your pocket

If reality capture is about seeing, Visual Intelligence (the concept that jobsite imagery, anchored to time and location, becomes actionable) is about seeing, understanding, and acting. AI Autolocation is one of the foundational pieces that makes the jump from reality capture to Visual Intelligence possible: it gives your phone real-time indoor positioning on active jobsites without beacons or special hardware. It’s a patent-pending capability built on our Spatial AI Engine, and it comes to life in OpenSpace Field, our image-first system of work.

Builders already capture a ton of visual context with their 360° walks, smartphone photos, and drone imagery. Our Spatial AI Engine organizes these images by time and place and understands what’s in them. This gives you the ability to find what you need quickly and, more importantly, act on it in OpenSpace and in other tools you already use, including through deep integrations with Procore and Autodesk Construction Cloud (ACC).

All of this can now happen with the one device you already carry: your smartphone.

OpenSpace Field turns your smartphone into the front end for field workflows: fast, simple, and grounded in reality. Layer on our breakthrough innovation, AI Autolocation, and you get real-time indoor positioning for the whole team, positioning that gets smarter with every capture.

Under the hood: how AI Autolocation works

GPS stops at the door. Satellite signals struggle indoors, and beacon-based “indoor GPS” systems don’t fit the organized chaos of construction. AI Autolocation takes a different path:

- Captures create a sensor map: Your normal 360° walks generate a living model of the site plus a compact fingerprint that ties sensor data to specific spots on the floor plan.

- Your phone streams live signals: As you move (even when you’re not taking a 360° capture), your phone collects sensor data.

- The system matches fingerprints: We compare your phone’s live sensor readings to the latest sensor map, estimate your position, and update confidence in real time.

- It learns as the site evolves: Each new capture refreshes the model and map, so positioning keeps up as conditions change.
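The four steps above resemble classic fingerprint-based indoor positioning. As a rough illustration only (this is not OpenSpace’s implementation, which this post does not describe), here is a minimal sketch using weighted k-nearest-neighbor (WKNN) matching, a standard fingerprinting technique. Everything in it is a hypothetical assumption: the `Fingerprint` and `SensorMap` names, the abstract feature vectors, the one-fingerprint-per-grid-cell refresh rule, and the confidence heuristic.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Fingerprint:
    """Hypothetical fingerprint: a floor-plan position plus a sensor feature vector."""
    x: float  # floor-plan coordinates (units are an assumption, e.g. meters)
    y: float
    features: tuple[float, ...]  # abstract sensor readings recorded at (x, y)


class SensorMap:
    """Hypothetical sensor map that keeps one fingerprint per floor-plan grid cell."""

    def __init__(self, cell_size: float = 1.0) -> None:
        self.cell_size = cell_size
        self.cells: dict[tuple[int, int], Fingerprint] = {}

    def _cell(self, fp: Fingerprint) -> tuple[int, int]:
        # Snap a fingerprint's position to its grid cell.
        return (math.floor(fp.x / self.cell_size), math.floor(fp.y / self.cell_size))

    def refresh(self, capture: list[Fingerprint]) -> None:
        """Overwrite cells covered by a newer capture; untouched cells keep older data."""
        for fp in capture:
            self.cells[self._cell(fp)] = fp

    def locate(self, reading: tuple[float, ...], k: int = 3) -> tuple[float, float, float]:
        """Weighted k-nearest-neighbor estimate; returns (x, y, confidence)."""
        if not self.cells:
            raise ValueError("sensor map is empty")
        # Rank fingerprints by Euclidean distance in feature space and keep the k closest.
        ranked = sorted(self.cells.values(), key=lambda fp: math.dist(fp.features, reading))[:k]
        dists = [math.dist(fp.features, reading) for fp in ranked]
        # Closer matches get larger weights; the small epsilon avoids division by zero.
        weights = [1.0 / (d + 1e-6) for d in dists]
        total = sum(weights)
        x = sum(w * fp.x for w, fp in zip(weights, ranked)) / total
        y = sum(w * fp.y for w, fp in zip(weights, ranked)) / total
        # Simple heuristic: confidence falls as the best match gets farther away.
        confidence = 1.0 / (1.0 + dists[0])
        return x, y, confidence


if __name__ == "__main__":
    site = SensorMap(cell_size=1.0)
    site.refresh([
        Fingerprint(0.5, 0.5, (10.0, 2.0)),
        Fingerprint(5.5, 0.5, (20.0, 4.0)),
        Fingerprint(0.5, 5.5, (30.0, 6.0)),
    ])
    print(site.locate((10.1, 2.0)))
    # A later capture covering cell (0, 0) replaces the stale fingerprint there.
    site.refresh([Fingerprint(0.4, 0.6, (50.0, 9.0))])
    print(site.locate((10.1, 2.0)))
```

Keying the map by grid cell is one simple way to make "each new capture refreshes the map" concrete: newer readings win wherever a capture overlaps, and areas nobody has walked recently keep their older data. The confidence formula is only a placeholder; a real system might also smooth estimates over time using the phone’s motion data.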

The result: reliable indoor positioning that adapts as the construction site evolves.

With AI Autolocation, everyday tasks get faster and clearer. Field Notes, observations, and images are auto-pinned to the right spot on the plan, cutting back-and-forth and miscommunication. On complex sites, a live “you-are-here” view helps you get to the right room the first time. And with AI Voice Notes, you can talk through a punch item and our AI creates your Field Note—filling in assignee, due date, and more—so you can see it, deal with it, get it done.

The flywheel in action

Every 360° capture makes AI Autolocation a little sharper. As positioning gets smarter, logging issues gets faster—especially with AI Voice Notes turning what you say into complete Field Notes in the right place. With more, better-placed issues, progress tracking becomes more accurate and decisions get quicker. Those wins encourage teams to capture even more, which in turn strengthens AI Autolocation again. The loop compounds across projects and over time.

From AI Autolocation to spatially aware AI agents

Looking ahead, AI Autolocation lays the groundwork for a new class of assistants we call spatially aware AI agents. While these features aren’t in our product today, they’re an active area of R&D and early prototyping. The idea is simple: if an agent understands where you are—not just what you’re working on—it can become far more helpful.

In internal demos, we’ve explored agents that could quietly watch your context and, as you enter a zone, surface the most relevant nearby items from OpenSpace, Procore, or ACC, along with suggested next steps. We’ve also experimented with “X-ray” moments, where the agent retrieves archival imagery from the exact room you’re standing in, pre-drywall, so you can reason about what’s behind the wall without opening it up. And at the portfolio level, we’re testing summaries that roll visual and spatial signals into an executive snapshot of progress and risk, grounded in reality data rather than documents alone.

These concepts are exploratory and will evolve as we learn with customers. We’ll share timelines and specifics as they mature.

Learn more

- AI Autolocation is available to select customers as part of early access to OpenSpace Field. If you’re a current customer interested in learning more, please fill out our form.

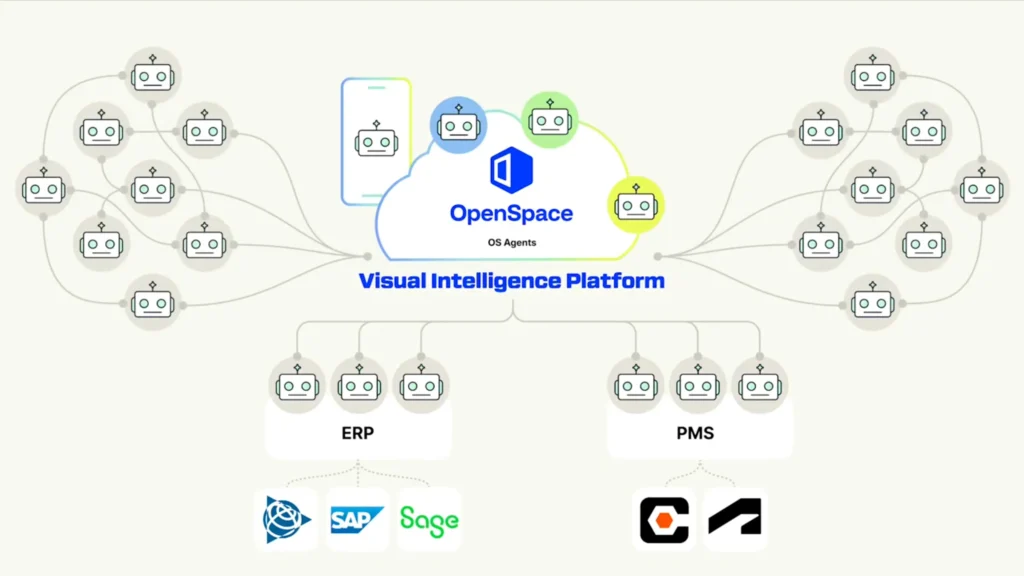

- See our CEO Jeevan Kalanithi’s blog post, “Beyond reality capture: why we built the Visual Intelligence Platform,” for a view of why it matters for GCs, trades, and owners.

- To learn more about the tech behind our Visual Intelligence Platform, watch my talk from Waypoint 2025.